Blog Series

Insights and case studies from AI-Driven Research Systems (ADRS).

Automating Algorithm Discovery: A Case Study in Optimizing Attention Mechanisms with SkyLight

by: ADRS Team, January 29, 2026

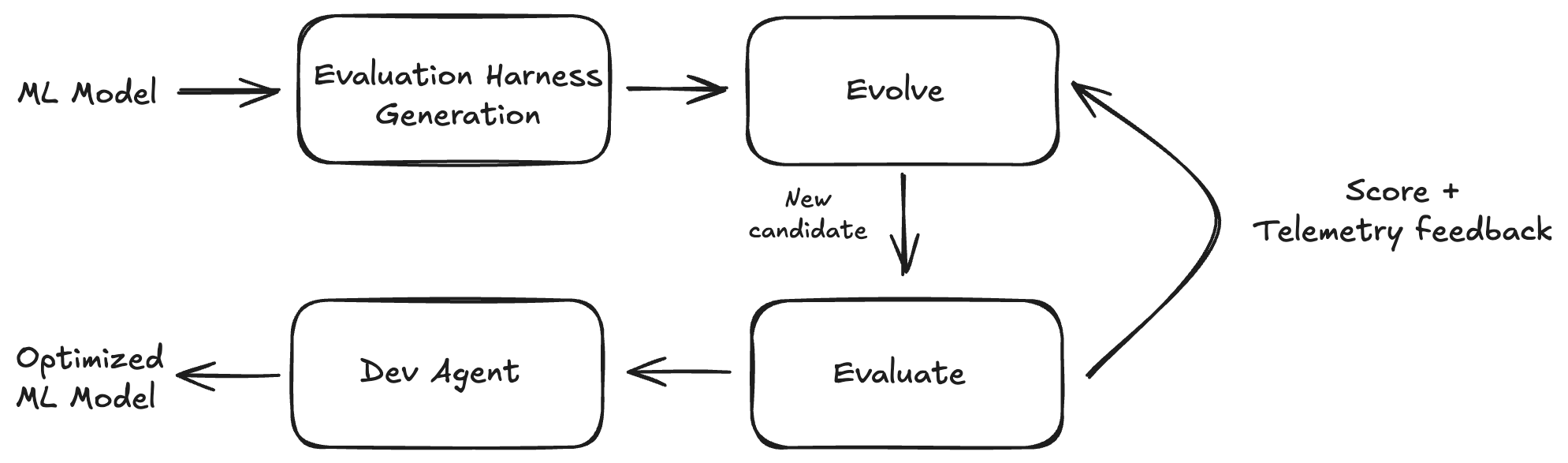

This post is the twelfth in our ADRS series, where we use AI to automatically discover better algorithms for real-world systems problems. In this blog, we study sparse attention for accelerating decoding in Large Language Models (LLMs). The goal is to reduce memory traffic and latency during the decoding phase, a fundamental bottleneck in LLM inference. We explore how AI, specifically a Cursor agent within the SkyLight framework, can evolve solutions toward state-of-the-art approaches such as vAttention.

Automating Algorithm Discovery: A Case Study in Multi-Cloud Data Access with Cloudcast

by: ADRS Team, January 22, 2026

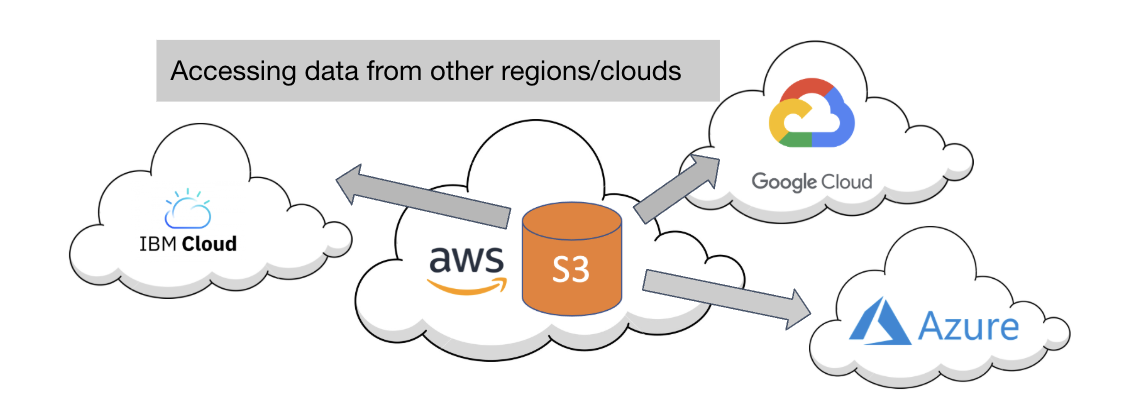

This post is the eleventh in our ADRS series, where we use AI to automatically discover better algorithms for real-world systems problems. In this blog, we tackle the challenge of efficiently accessing data across multiple cloud providers and regions, previously addressed by Cloudcast. By leveraging ADRS to evolve scheduling policies, we discover novel algorithms that minimize latency and cost when accessing data from different clouds.

Automating Algorithm Discovery: A Case Study in Policy Optimization with Bauplan

by: Bauplan & ADRS Teams, January 15, 2026

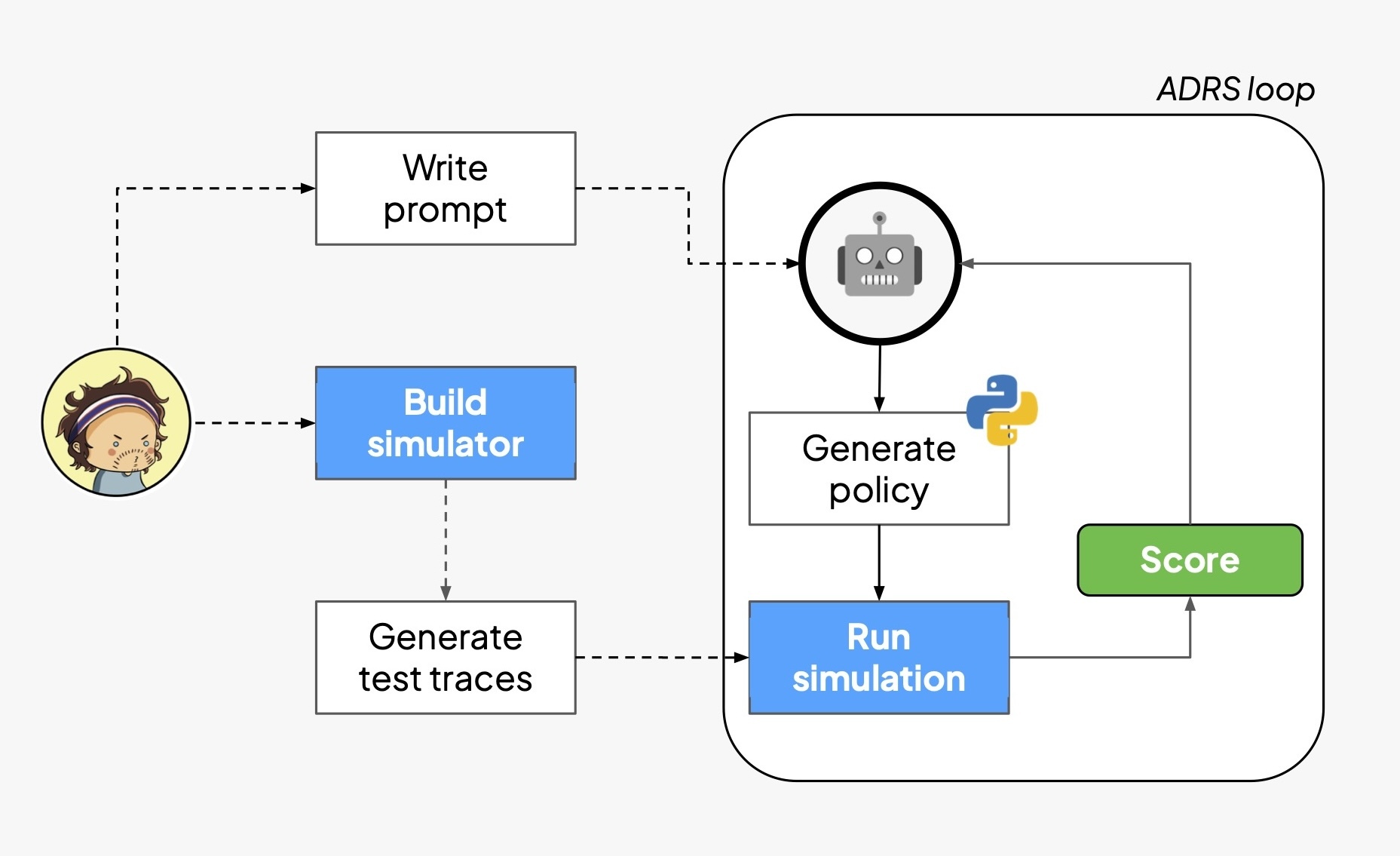

This post is the tenth in our ADRS series, where we use AI to automatically discover better algorithms for real-world systems problems. In this blog, we partner with Bauplan to explore how ADRS can optimize policy generation for data pipeline systems. By combining simulation-driven evaluation with an evolutionary search loop, we demonstrate how AI can iteratively refine scheduling policies and configuration parameters, achieving significant performance improvements over hand-tuned baselines.

Automating Algorithm Discovery: A Case Study in Optimizing LLM Serving with Prism

by: ADRS Team, January 8, 2026

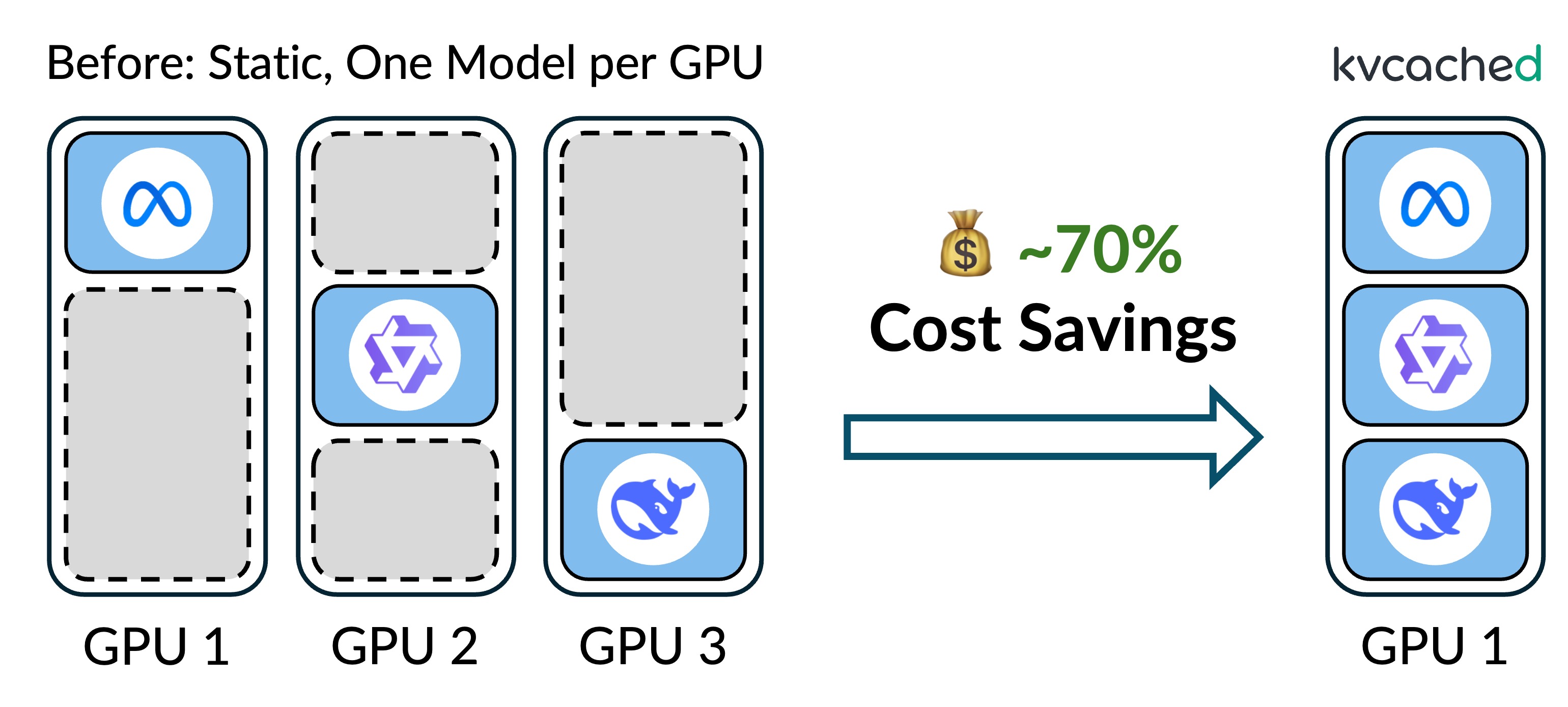

This post is the ninth in our ADRS series, where we use AI to automatically discover better algorithms for real-world systems problems. In this blog, we explore the challenges of managing GPU memory for LLM inference serving. We highlight Prism, a system that optimizes memory management and model placement. By leveraging an exact-caching mechanism and optimized scheduling, Prism achieves ~70% cost savings compared to static assignments, allowing for significantly higher throughput and efficiency in serving workloads.

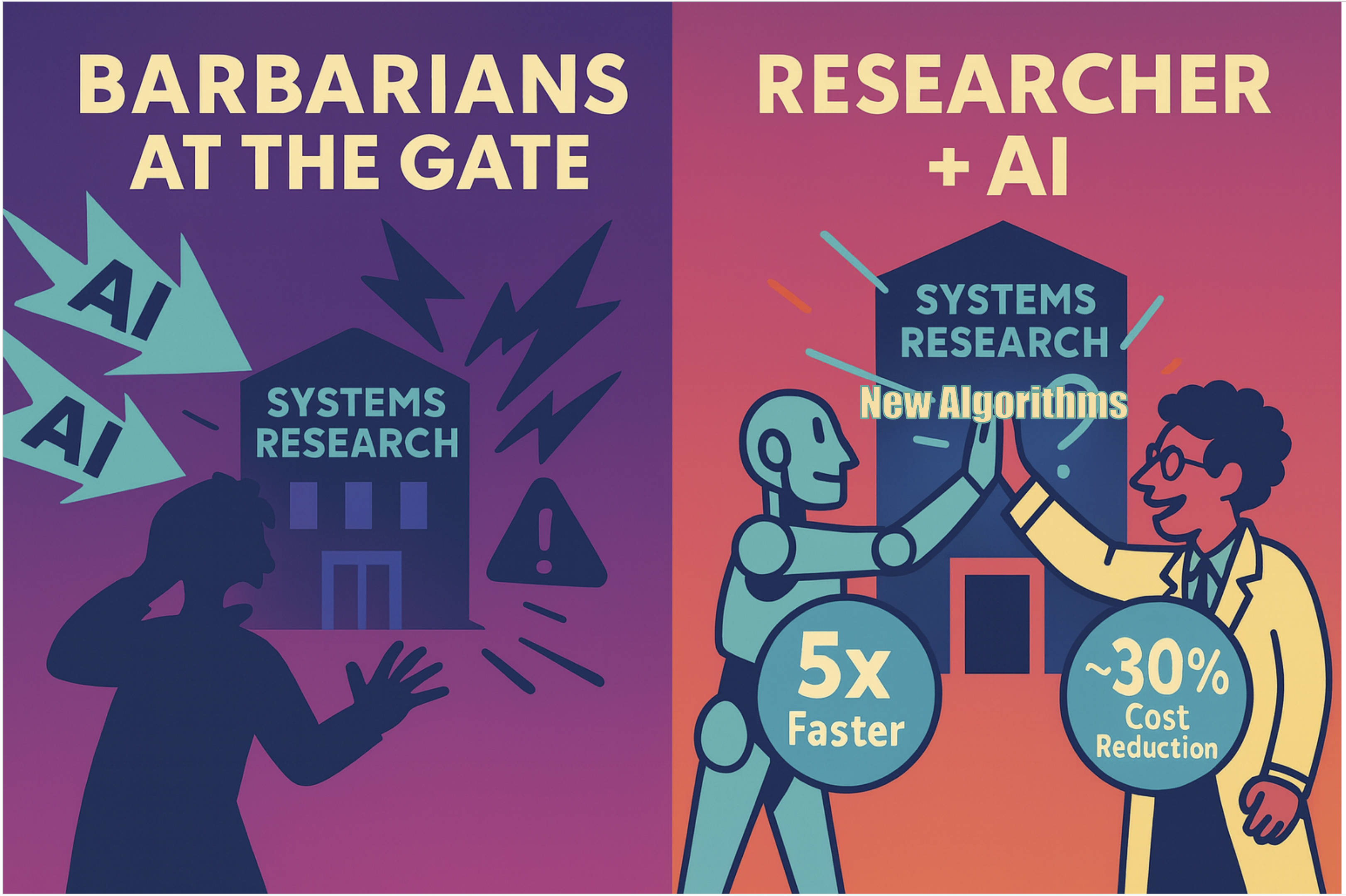

Let the Barbarians In: How AI Can Accelerate Systems Performance Research

by: ADRS Team, January 2, 2026

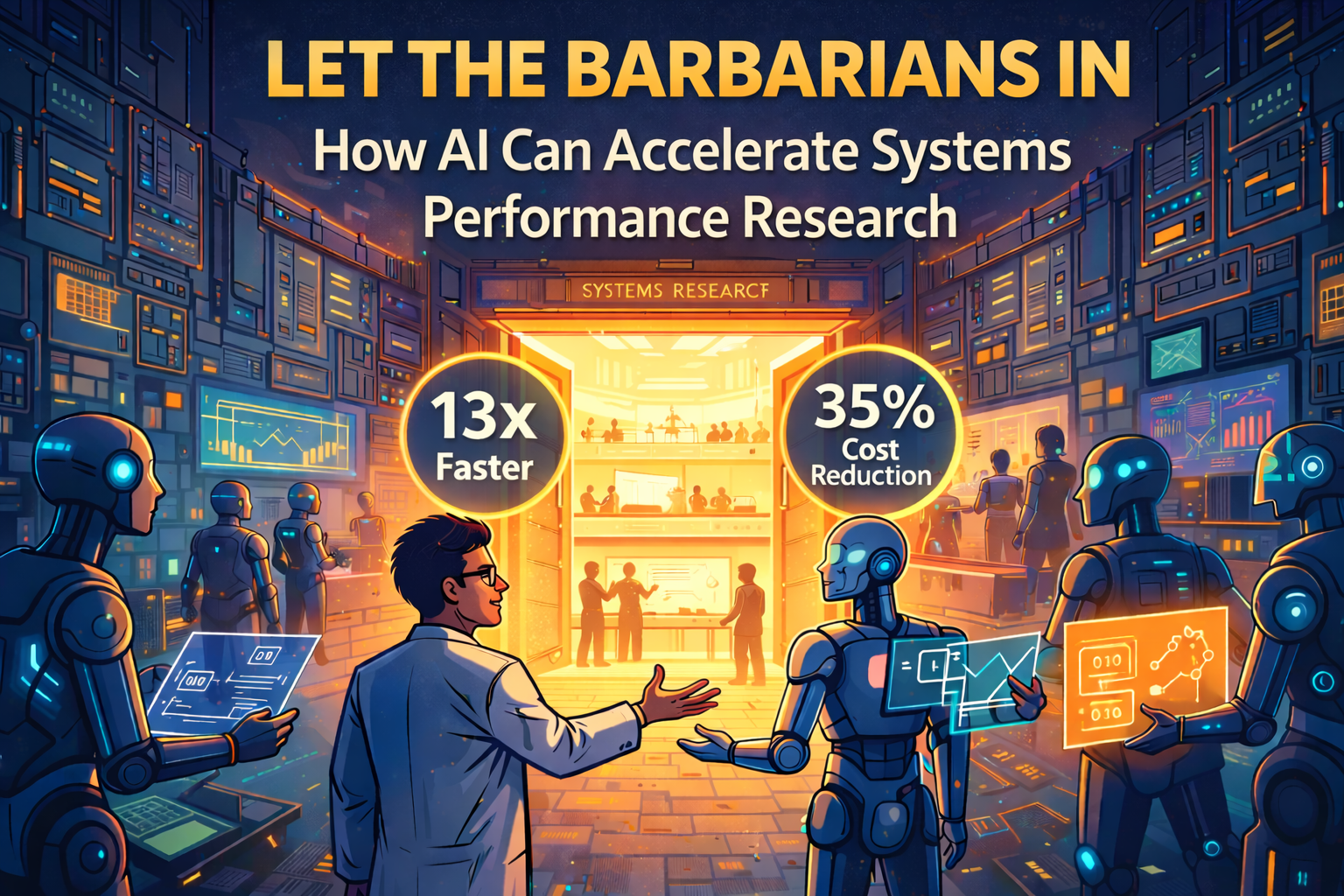

This post is the eighth in our ADRS series, which expands upon our work on AI-Driven Research for Systems (ADRS). We evaluate three open-source frameworks across ten real-world research problems, demonstrating their ability to generate solutions that outperform human experts, including a 13x speedup in load balancing and 35% cost savings in cloud scheduling. Based on these findings, we outline best practices for problem specification, evaluation, and feedback, providing a roadmap for applying these tools effectively.

Automating Algorithm Discovery: A Case Study in Improving Multi-Agent System Design using MAST

by: ADRS Team, December 15, 2025

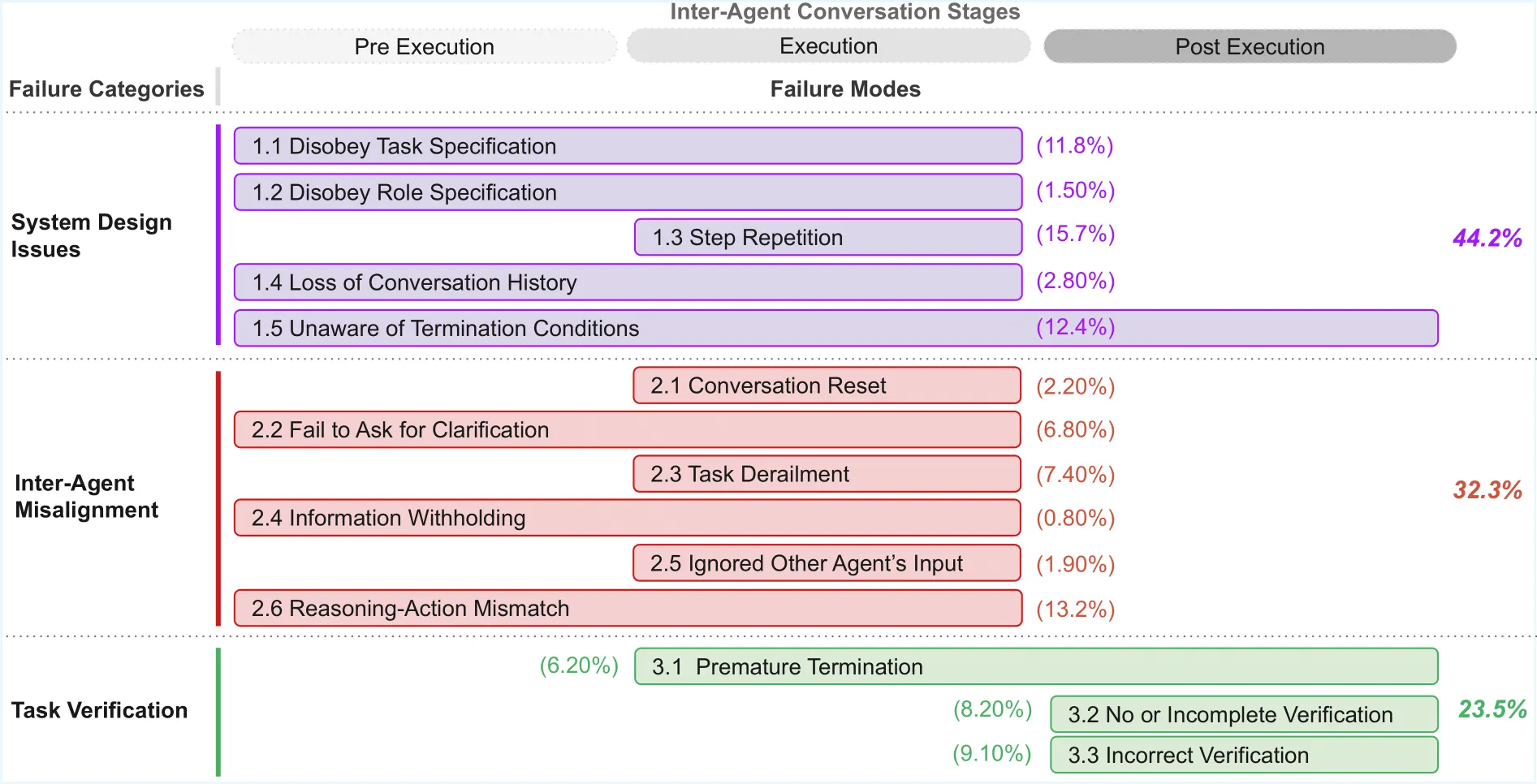

This post is the seventh in our ADRS series, where we use AI to automatically discover better algorithms for real-world systems problems. Designing effective multi-agent systems typically requires debugging workloads via execution logs and iteratively refining the agentic system’s behavior. Previously, we demonstrated how the MAST Annotator provides scalable, systematic feedback on failure modes to guide agent builders toward design improvements. However, that approach still relied on hand-crafted solutions and implementations. In this blog, we replace hand-tuning with OpenEvolve to optimize the Multi-Agent System (MAS) code directly. Guided by MAST feedback, OpenEvolve continuously mutates the architecture and automatically converges toward a more reliable system, reducing failure rates by 7x.

BitsEvolve: Self-Optimizing GPU Code Generation at Datadog

by: Datadog & ADRS Team, December 4, 2025

This post is the sixth in our ADRS series, where we explore how AI can be applied to systems research. We feature exciting work from Datadog this week! In this blog post, we examine the problem of generating production-ready, optimized GPU code from an evolutionary search perspective. Specifically, we share results from BitsEvolve, an ADRS framework built at Datadog that targets a range of modalities, from optimizing hotspots in CPU-bound code to tuning policies and configurations for applications such as inference serving frameworks (e.g., vLLM), load balancers, garbage collectors, and more. Through profile guidance and robust evaluation mechanisms, we show how BitsEvolve-generated code can outperform compiled models, achieving speedups of up to 1.6x with reasonable search costs.

Autocomp: An ADRS Framework for Optimizing Tensor Accelerator Code

by: Autocomp & ADRS Teams, November 20, 2025

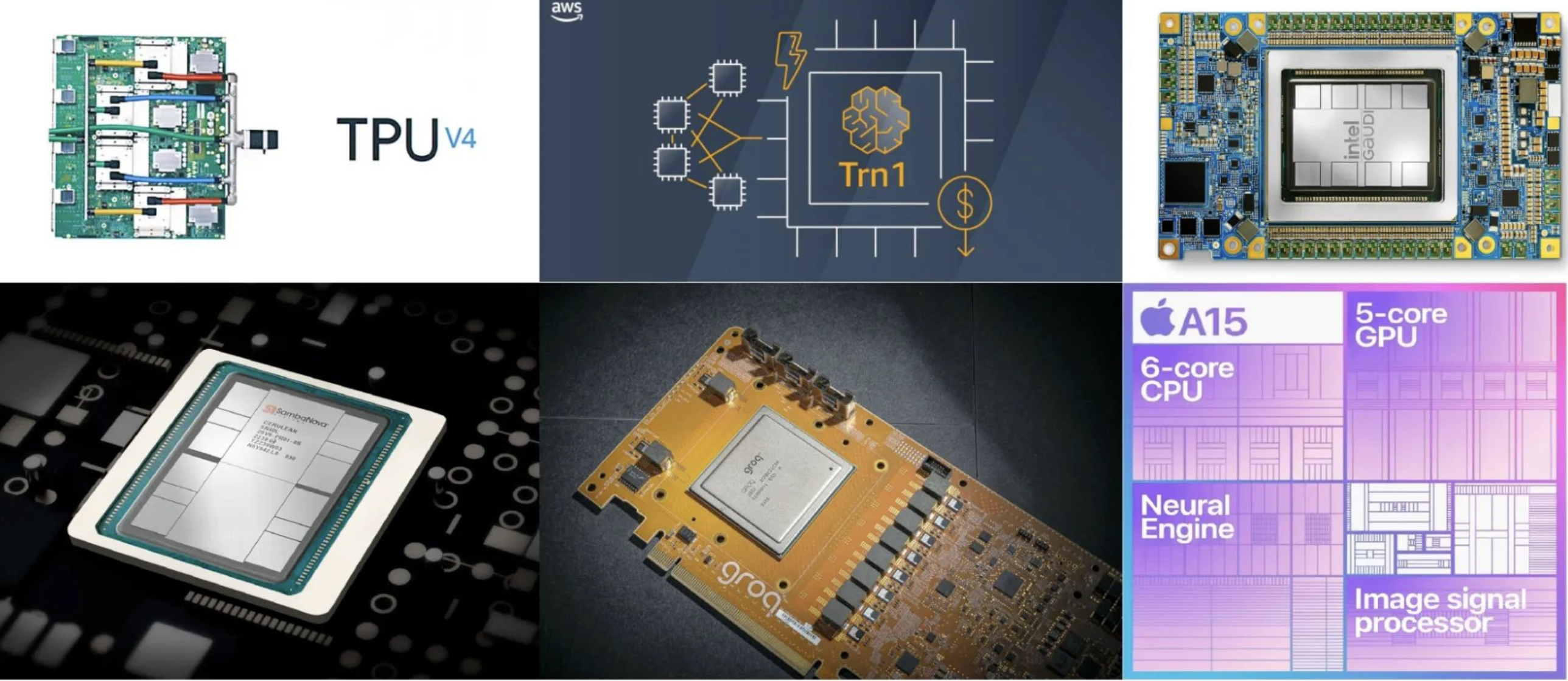

This post is the fifth in our ADRS series, where we explore how AI can be applied to systems research. This post was contributed by a team of our colleagues at UC Berkeley’s SLICE Lab! In this blog post, we go down the stack and explore how AI is being used to speed up AI at the kernel level. Specifically, we highlight Autocomp, the first LLM-driven code optimizer for low-resource tensor accelerators. Autocomp helps hardware designers extract the full performance of tensor accelerators, outperforming expert human kernel writers by up to 17x on AWS Trainium while remaining highly portable and easy to use.

Automating Algorithm Discovery: A Case Study in Transaction Scheduling

by: ADRS Team, November 13, 2025

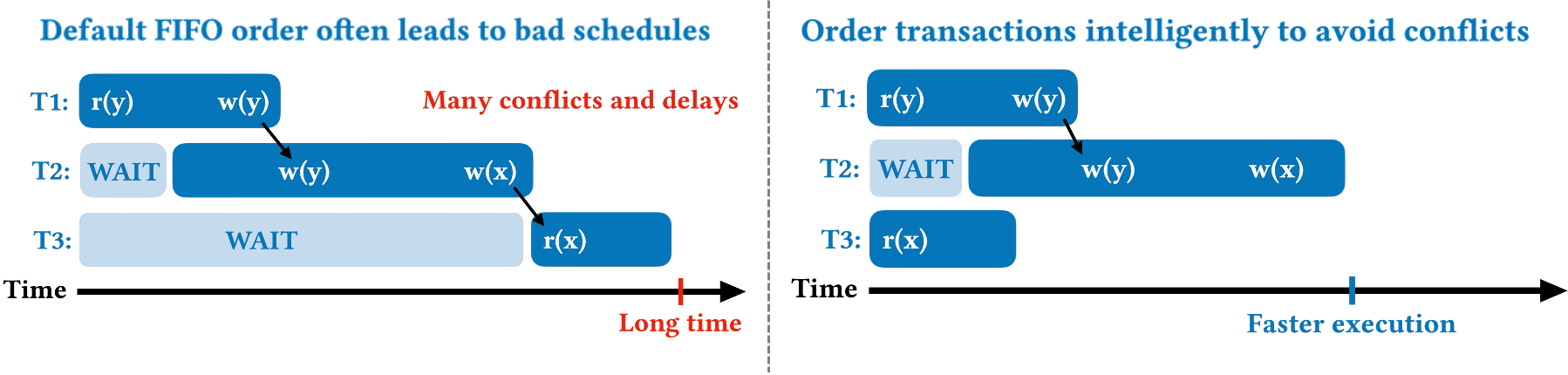

This post is the fourth in our ADRS series. In this blog, we revisit a recent research problem from our VLDB ’24 paper, Towards Optimal Transaction Scheduling, which minimizes contention for database transactional workloads. We show how we leverage an ADRS framework to discover an algorithm that produces 34% faster schedules. This case study shows how AI can be used to develop solutions for different problem settings that would otherwise require manual redesign.

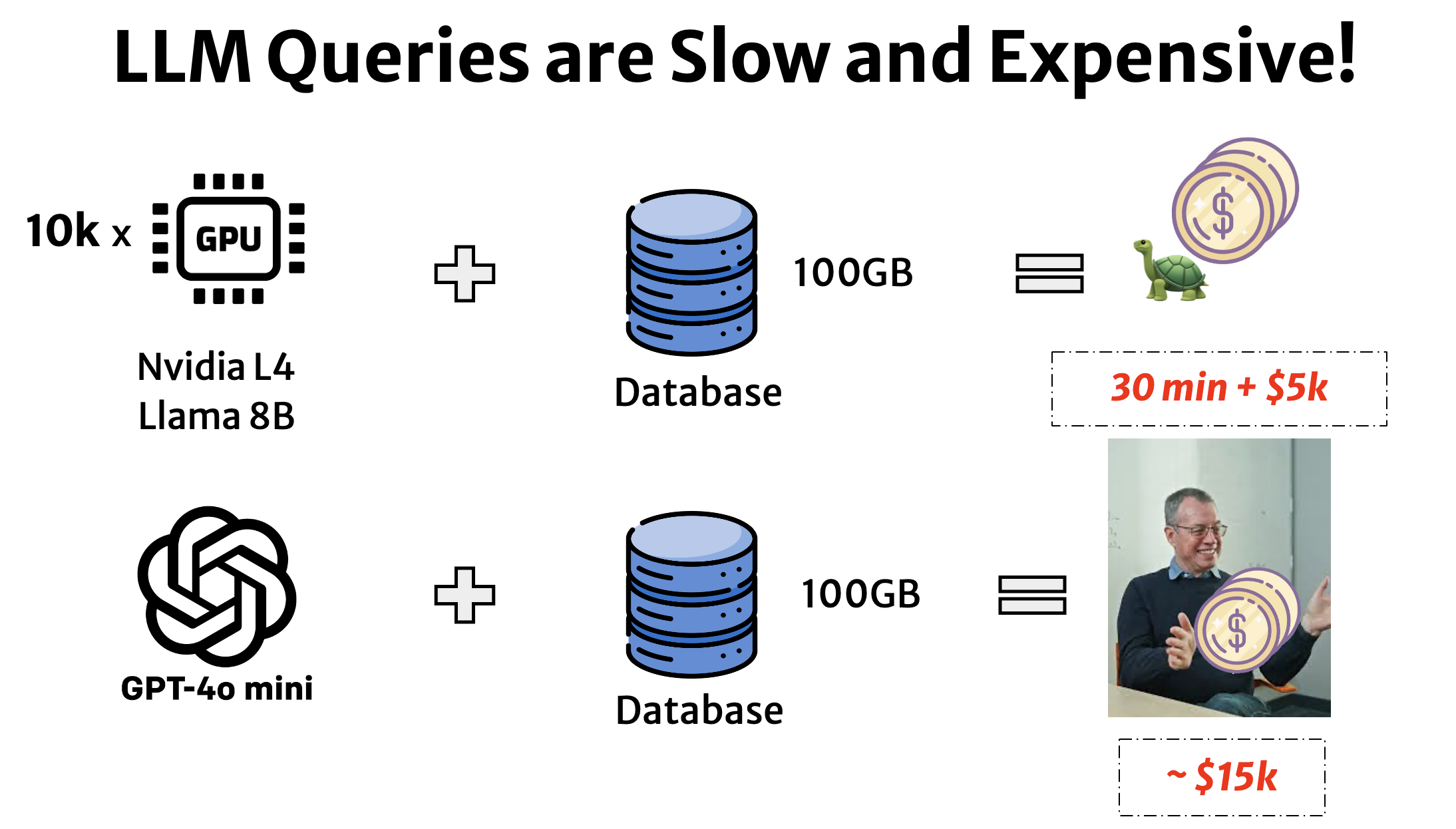

Automating Algorithm Discovery: A Case Study in Optimizing LLM Queries over Relational Workloads

by: ADRS Team, November 6, 2025

This post is the third in our ADRS series, where we use AI to automatically discover better algorithms for real-world systems problems. In this blog, we revisit a recent research challenge from our MLSys ’25 paper, “Optimizing LLM Queries in Relational Data Analytics Workloads”, which tackles the high cost and latency of executing LLM queries over relational data. We show how ADRS, using OpenEvolve, autonomously discovered a 3× faster algorithm that achieves the same prefix reuse ratio as the published solution.

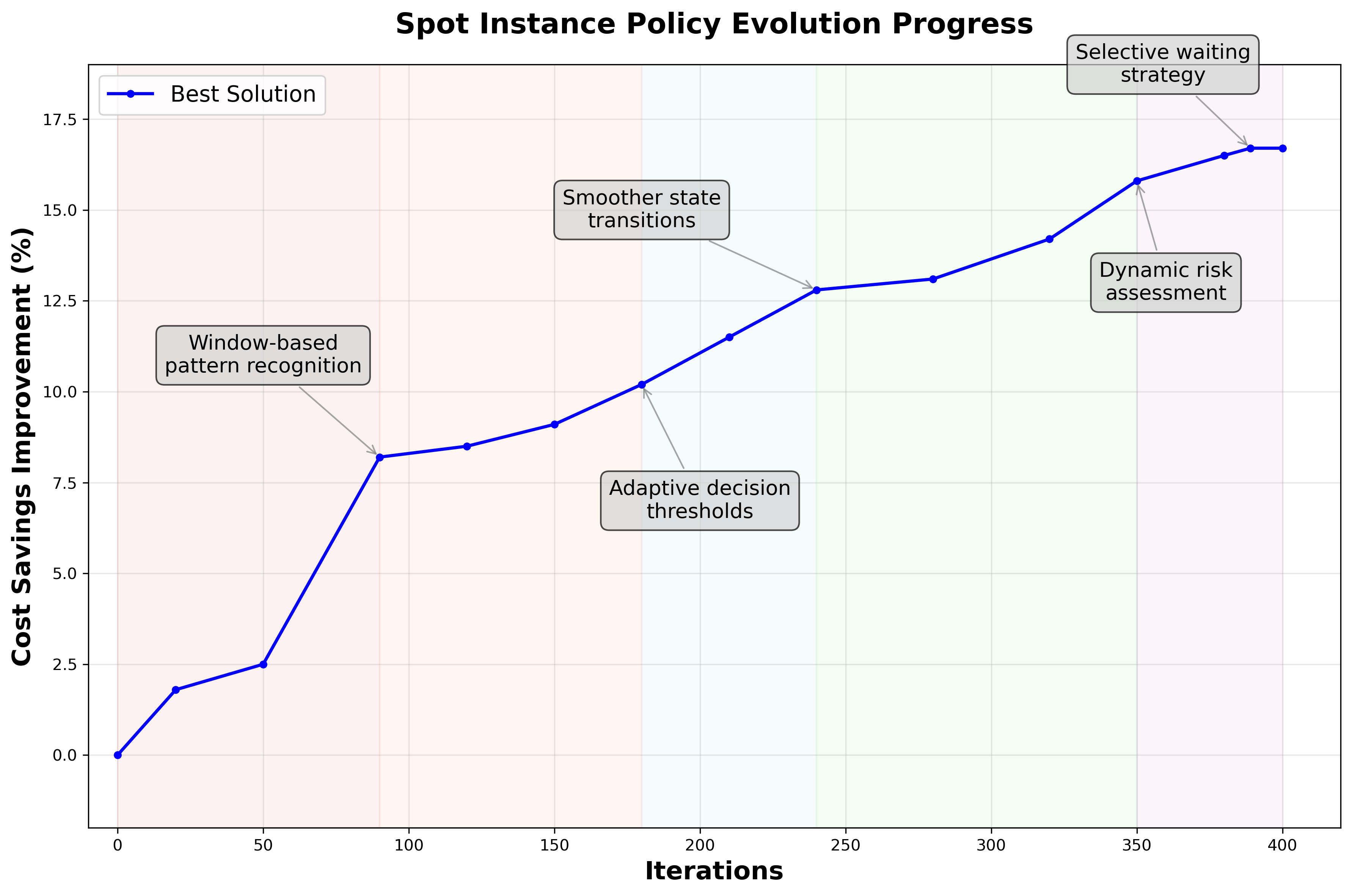

Automating Algorithm Discovery: A Case Study in Spot Instance Scheduling

by: ADRS Team, October 30, 2025

This post is the second in our ADRS series, where we apply AI to optimize complex systems problems. Here, we tackle spot instance scheduling, a classic cost-versus-reliability problem in the cloud. Specifically, we demonstrate how OpenEvolve discovers novel algorithms that surpass the algorithm from an NSDI ’24 Best Paper, achieving up to 16% and 48% cost savings in single- and multi-region setups, respectively.

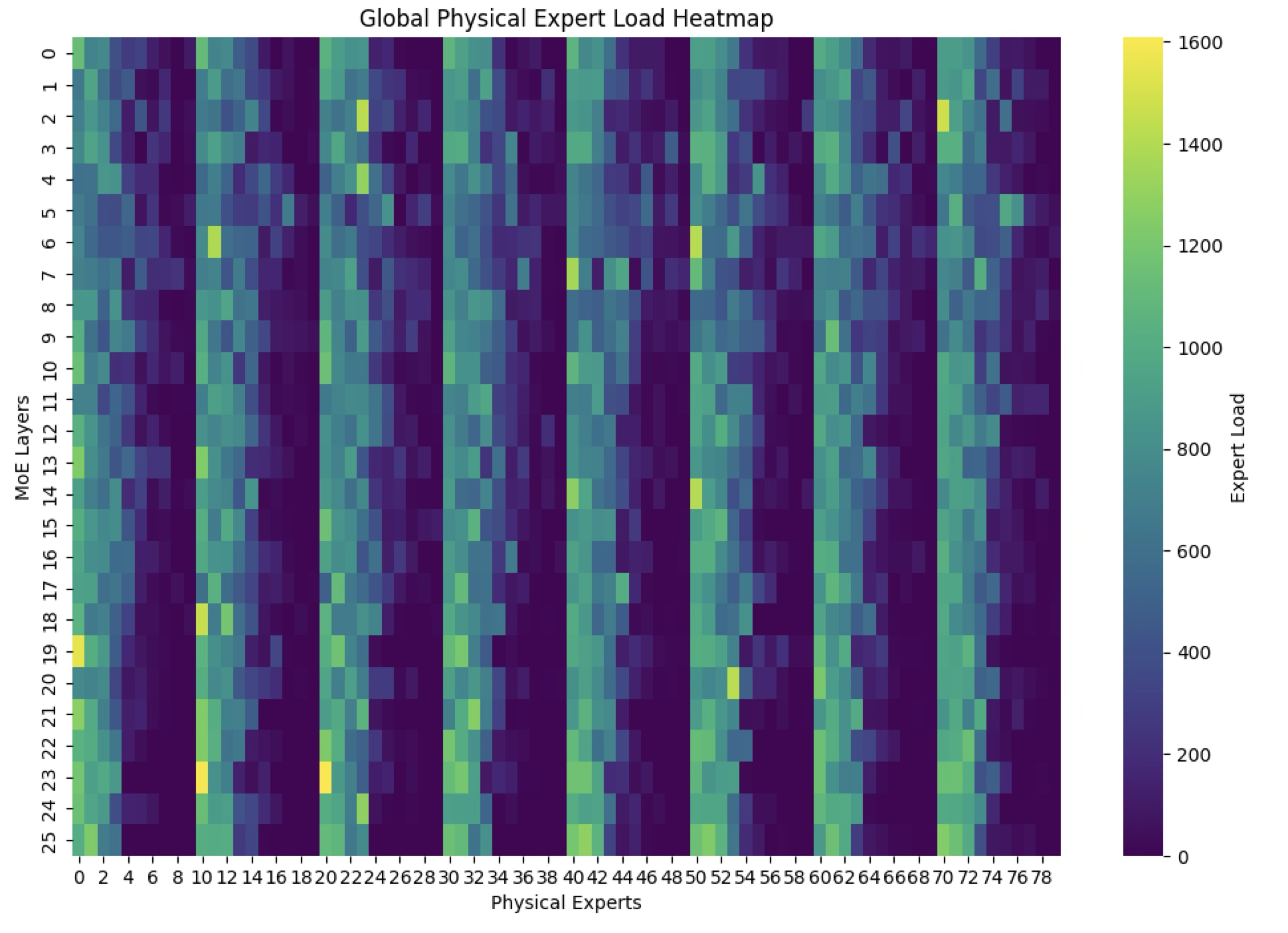

Automating Algorithm Discovery: A Case Study in MoE Load Balancing

by: ADRS Team, October 23, 2025

This post is the first in a series of case studies in which we apply ADRS to optimize performance in various systems. In this blog, we discuss the optimization of a key component in large language model (LLM) inference. Specifically, we demonstrate how OpenEvolve independently discovers and surpasses highly optimized algorithms engineered by human experts to achieve a 5.0x speedup.

Barbarians at The Gate: How AI is Upending Systems Research

by: ADRS Team, October 17, 2025

AI is no longer just tuning systems as a "black box." It is now rewriting their core algorithms by treating the system as a "white box" and discovering solutions that can outperform human experts. This new approach, which we term AI-Driven Research for Systems (ADRS), can automate some of the most tedious parts of research.